Fuck AI

I want to apologize for changing the description without telling people first. After reading arguments about how overhyped AI has been, I'm not that frightened by it. It's awful that it hallucinates and that it spews garbage onto YouTube and Facebook, but it won't completely upend society. I'll post plenty of articles on AI hype, because they're quite funny and give me a sense of ease knowing that, although blatant lies are easy to tell, it's far harder to fake actual evidence.

I also want to factor in people who think that there's nothing anyone can do. I've come to realize that there might not be a way to attack OpenAI, MidJourney, or Stable Diffusion. These people, whom I will call Doomers (a term from an AIHWOS article), **are perfectly welcome here.** You can certainly come along and read the [AI Hype Wall Of Shame](https://criticalai.org/the-ai-hype-wall-of-shame/), or the [diminishing returns of Deep Learning.](https://spectrum.ieee.org/deep-learning-computational-cost) Maybe you can even become a Mod!

Boosters, or people who heavily use AI and see it as a source of good, ARE NOT ALLOWED HERE! I've seen Boosters dox, threaten, and harass artists over on Reddit and Twitter, and they constantly champion artists losing their jobs. They go against the very purpose of this community. *If I see a comment on here saying that AI is "making things good" or cheering on putting anyone out of a job, and the commenter does not retract their statement, said commenter will be permanently banned.* FA&FO.

Alright, I just want to clarify that I've never modded a Lemmy community before. I just have the mantra of "if nobody's doing the right thing, do it yourself". I was also motivated by the decision from u/spez to let an unknown AI company use Reddit's imagery. If you know how to moderate well, please let me know. Also, feel free to discuss ways to attack AI development, and if you have evidence of AIBros being cruel and remorseless, make sure to save it for people who are "on the fence". Remember, *we don't know if AI is unstoppable.* AI takes loads of energy and tons of circuitry to run. There may very well be an end to this cruelty, and it's up to us to begin that end.

gizmodo.com

**A Massachusetts couple claims that their son's high school attempted to derail his future by giving him detention and a bad grade on an assignment he wrote using generative AI.**

> An old and powerful force has entered the fraught debate over generative AI in schools: litigious parents angry that their child may not be accepted into a prestigious university.
>
> In what appears to be the first case of its kind, at least in Massachusetts, a couple has sued their local school district after it disciplined their son for using generative AI tools on a history project. Dale and Jennifer Harris allege that the Hingham High School student handbook did not explicitly prohibit the use of AI to complete assignments and that the punishment visited upon their son for using an AI tool—he received Saturday detention and a grade of 65 out of 100 on the assignment—has harmed his chances of getting into Stanford University and other elite schools.

Yeah, I'm 100% with the school on this one.

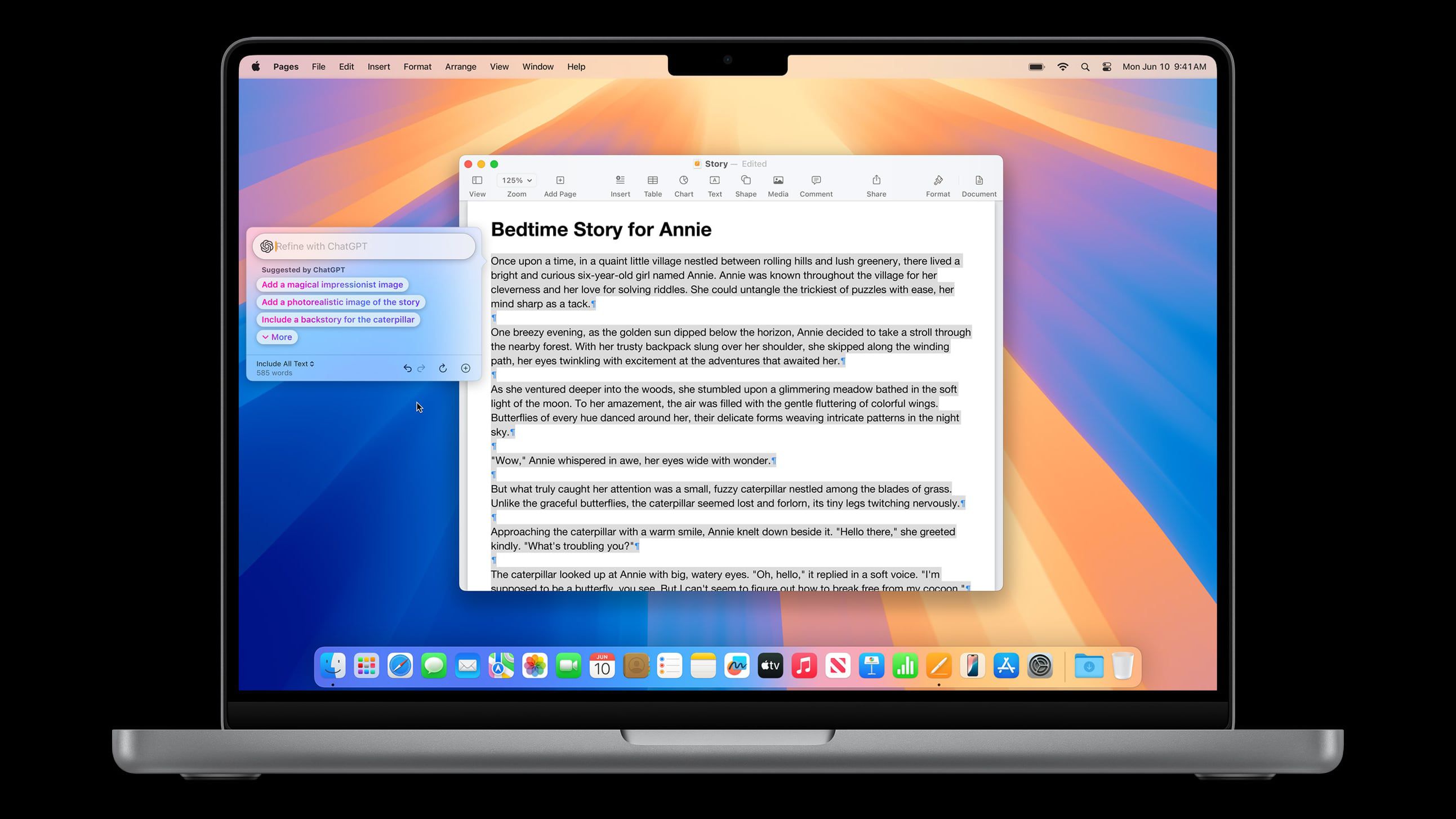

www.macrumors.com

...and Graphene stands alone.

www.rfi.fr

Widely shared on social media, the atmospheric black and white shots -- a mother and her child starving in the Great Depression; an exhausted soldier in the Vietnam war -- may look at first like real historic documents. But they were created by artificial intelligence, and researchers fear they are muddying the waters of real history. "AI has caused a tsunami of fake history, especially images," said Jo Hedwig Teeuwisse, a Dutch historian who debunks false claims online. "In some cases, they even make an AI version of a real old photo. It is really weird, especially when the original is very famous."

nypost.com

A European delivery company had to disable its AI chatbot after it started swearing at a customer and admitting it was the “worst delivery firm in the world.” Dynamic Parcel Distribution (DPD) had to turn off its AI chatbot feature after disgruntled UK customer Ashley Beauchamp managed to get it to swear at him and write a disparaging poem.

You can enter a domain, image, etc. They also have a Do Not Train Registry and an API that models can use to respect it.

techcrunch.com

Instagram head Adam Mosseri on Friday addressed the moderation issues that saw Instagram and Threads users losing access to their accounts, having posts disappear, and more, saying that the company “found mistakes” that it’s attributing to human moderators. The company did not initially blame faulty AI systems, as many believed would be the case. In a post on Threads, Mosseri addressed the issue that has been plaguing the social platforms over the past several days, adding that the mistakes it’s found so far were due to content reviewers — people, not automated systems — “making calls without being provided the context on how conversations played out, which was a miss.” His explanation doesn’t seem to fully account for the range of issues that users were experiencing, as some found their accounts erroneously labeled as belonging to a user under the age of 13, then disabled. It’s unclear how a human moderator would have made this assumption. In addition, according to a report by The Verge, even after a user submitted their ID to verify their age, their account still remained disabled.

omny.fm

As of last week, OpenAI is now worth $157 billion - yet below the hood is a far darker story. In this episode, Ed Zitron explains the cold, hard truth - that OpenAI is a terrible business that burns billions of dollars, and its failure to scale its cloud business tells a dark tale about the wider generative AI industry.

Using AI Hawk's Auto Jobs Applier bot, I applied for 17 jobs in an hour on LinkedIn.

- AI expert Gary Marcus says OpenAI may be forced to become a surveillance company to make money.
- AI could be leveraged to supercharge surveillance efforts. It's a scenario he calls "Orwellian."
- He said the company's employees should voice their concerns.

Archive: https://archive.ph/gRoOF

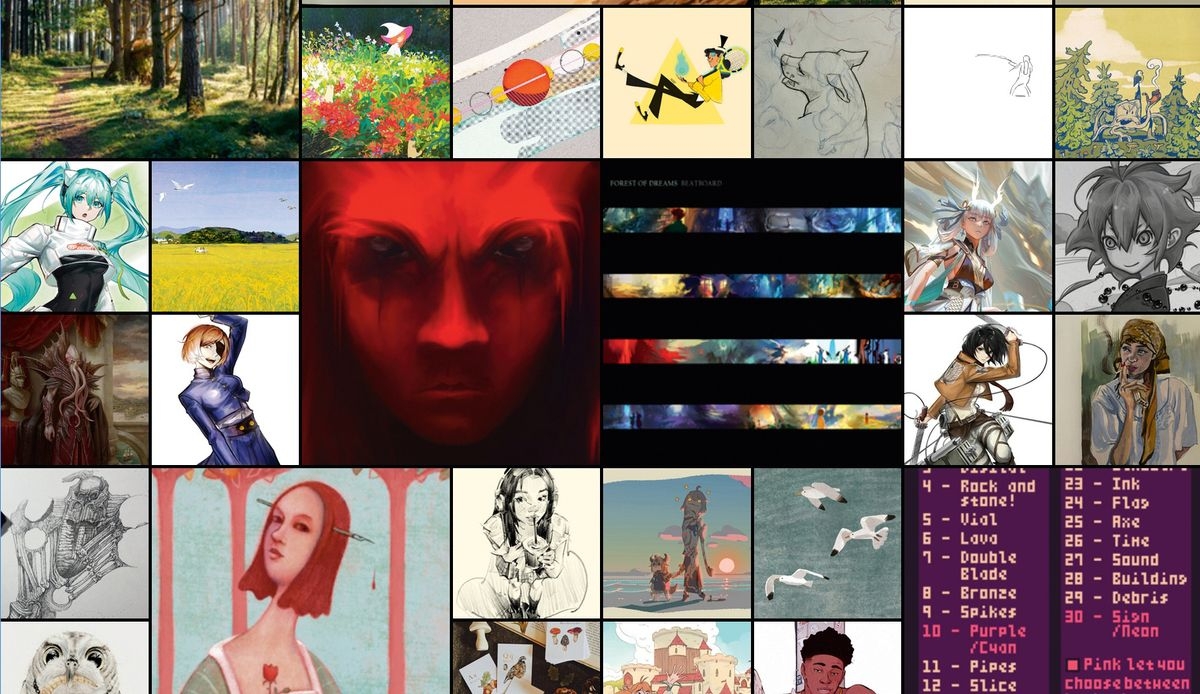

www.creativebloq.com

We talk to founder Jingna Zhang about the portfolio and social app that’s dedicated to supporting the art community.

arstechnica.com

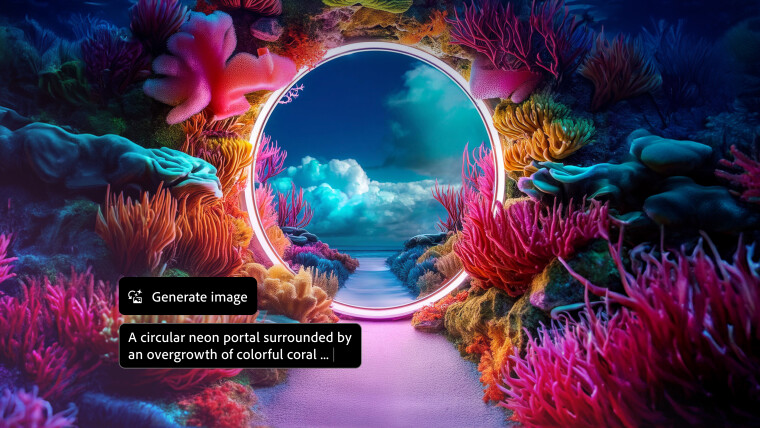

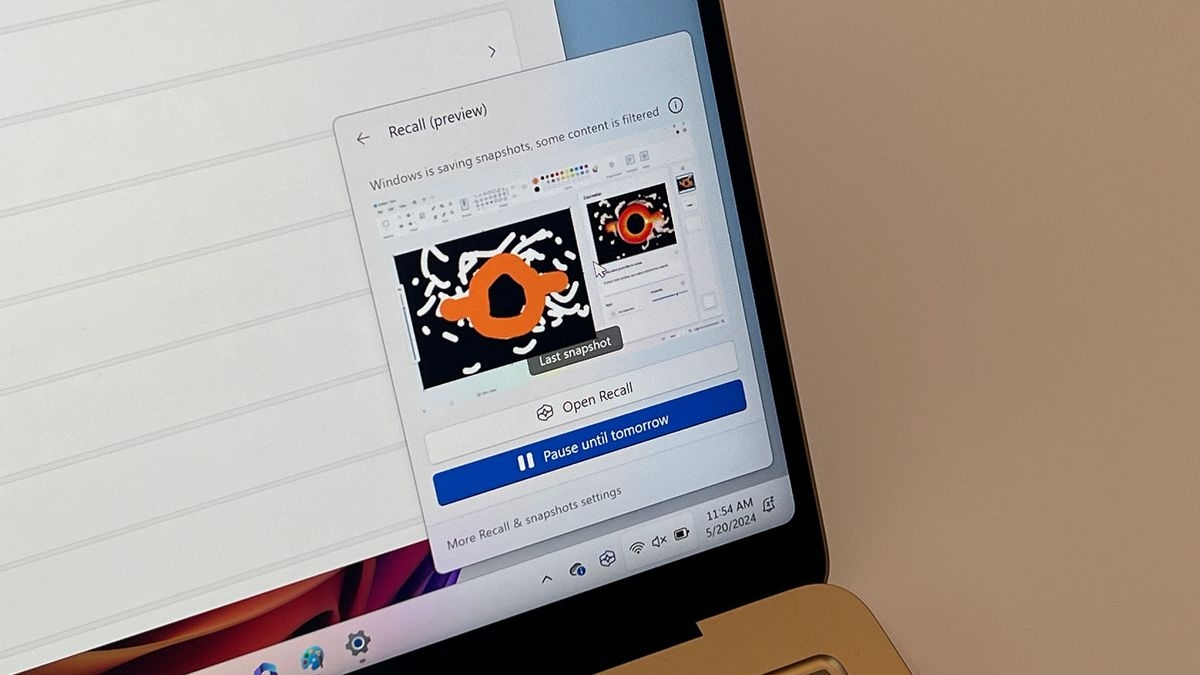

On Monday, Microsoft unveiled updates to its consumer AI assistant Copilot, introducing two new experimental features for a limited group of $20/month Copilot Pro subscribers: Copilot Labs and Copilot Vision. Labs integrates OpenAI's latest o1 "reasoning" model, and Vision allows Copilot to see what you're browsing in Edge. Microsoft says Copilot Labs will serve as a testing ground for Microsoft's latest AI tools before they see wider release. The company describes it as offering "a glimpse into 'work-in-progress' projects." The first feature available in Labs is called "Think Deeper," and it uses step-by-step processing to solve more complex problems than the regular Copilot. Think Deeper is Microsoft's version of OpenAI's new o1-preview and o1-mini AI models, and it has so far rolled out to some Copilot Pro users in Australia, Canada, New Zealand, the UK, and the US.

futurism.com

Jason Allen, the AI "artist" whose Midjourney-generated image won a fine arts competition two years ago, is still mad that the government won't let him copyright his opus — and, in an amazing lack of self-awareness, is also crying that his work is being stolen as a result. The prizewinning image, "Théâtre D'opéra Spatial," was deemed to not wholly exhibit human authorship because a significant amount of it — as Allen himself disclaimed — was AI-generated, the US Copyright Office said in a ruling last September. As such, Allen could only claim credit for specific portions of the image that he created with Photoshop — not the thing as a whole. Now he's making another appeal, Creative Bloq reports, complaining that he's losing money to the tune of "several million dollars" because, without a copyright, his work is being used without his approval. Does this argument ring any bells?

www.404media.co

Udemy, an e-learning platform with more than 250,000 online classes, recently announced that it would train generative AI on the classes that its users contribute to the site. Not only were class teachers automatically opted in to having their classes used as training, Udemy said teachers would have only a three-week "window" to opt-out of training. That window has now passed. "We want to officially announce that the opt-out period for our Generative AI Program (GenAI Program) begins today, August 21st, and goes through September 12th. The choice to participate in the GenAI program is yours. If you want to participate, no action is needed!," Udemy said in a post on its community forums August 21. In an "Instructor Generative AI Policy" document, it says it plans to offer "Annual Periods designated by us" during which instructors can opt-out of having their classes trained on, and said that when people opt-out of training, it will remove the instructors' classes from its dataset "by the end of the calendar year." It has also told instructors that "By opting out, you'll lose access to all AI features and benefits, which may affect your course visibility and potential earnings." With the first opt-out window having passed, instructors are now seeing a grayed-out option in their settings if they didn't know about the window or would like to opt-out now.

::: spoiler alt-text
A user asks Bing/Copilot chat to summarize an invented article:

[User] summarize
```
https://www.nytimes.com/2024/09/29/us/dinosaurs-found-roaming-playground.html
```

Copilot

The article from The New York Times reports a surprising and amusing incident where life-sized dinosaur replicas were found roaming a playground in a small town. These realistic models, part of a local museum's exhibit, were accidentally released due to a security malfunction. The sight of dinosaurs in the playground caused quite a stir among the residents, especially the children, who were both thrilled and startled. The museum has since apologized and retrieved the dinosaurs, ensuring such an incident won't happen again. The event has sparked a lot of local interest and laughter, turning into a memorable story for the community[1].
:::

Note that this is problematic for a number of reasons, including that someone who wants to summarize a real article/thread won’t be informed that an accurate summary may be impossible.

... and neither does the author (or so I believe - I made them both up). On the other hand, AI is definitely good at creative writing.

www.techradar.com

No thanks. If I have a powerful PC/laptop with an NPU, I prefer to run AI locally on Linux.

www.cio.com

> “Many developers say AI coding assistants make them more productive, but a recent study set forth to measure their output and found no significant gains. Use of GitHub Copilot also introduced 41% more bugs, according to the study from Uplevel”

Study referenced: [Can GenAI Actually Improve Developer Productivity?](https://resources.uplevelteam.com/gen-ai-for-coding) (requires email)

Consumers could end up paying the (personalized) price as AI becomes more popular, FTC Chair Lina Khan recently warned. At the 2024 Fast Company Innovation Festival, Khan said that although AI may be beneficial, it's already becoming some of the FTC's "bread and butter fraud work." "Some of these AI tools are turbocharging that fraud because they allow some of these scams to be disseminated much more quickly, much more cheaply, and on a much broader scale," she said. AI is already helping automate classic online scams like phishing and even introducing new, alarming frauds like voice cloning that can target unsuspecting consumers. But Khan also took the opportunity to talk about a different way AI could be used to target consumers: retailers using surveillance technology and customer data to change the prices they offer to specific shoppers. Khan said the FTC is looking into AI's potential role in increasing the risk of price discrimination.

Archive: https://archive.is/Hzxt1

arstechnica.com

For the past few years, a conspiracy theory called "Dead Internet theory" has picked up speed as large language models (LLMs) like ChatGPT increasingly generate text and even social media interactions found online. The theory says that most social Internet activity today is artificial and designed to manipulate humans for engagement. On Monday, software developer Michael Sayman launched a new AI-populated social network app called SocialAI that feels like it's bringing that conspiracy theory to life, allowing users to interact solely with AI chatbots instead of other humans. It's available on the iPhone app store, but so far, it's picking up pointed criticism. After its creator announced SocialAI as "a private social network where you receive millions of AI-generated comments offering feedback, advice & reflections on each post you make," computer security specialist Ian Coldwater quipped on X, "This sounds like actual hell." Software developer and frequent AI pundit Colin Fraser expressed a similar sentiment: "I don’t mean this like in a mean way or as a dunk or whatever but this actually sounds like Hell. Like capital H Hell."

www.cartoonbrew.com

Lionsgate has become the first significant Hollywood studio to go all-in on AI. The company today announced a “first-of-its-kind” partnership with AI research company Runway to create and train an exclusive new AI model based on its portfolio of film and tv content. Lionsgate’s exclusive model will be used to generate what it calls “cinematic video” which can then be further iterated using Runway’s technology. The goal is to save money – “millions and millions of dollars” according to Lionsgate studio vice chairman Michael Burns – by having filmmakers and creators use its AI model to replace artists in production tasks such as storyboarding. In corporate jargon terminology, Burns said that AI will be used to “develop cutting-edge, capital-efficient content creation opportunities.” He added that “several of our filmmakers are already excited about its potential applications to their pre-production and post-production process.”

arstechnica.com

As AI deepfakes sow doubt in legitimate media, anyone can claim something didn't happen.

[Original post.](https://fosstodon.org/@BigAngBlack/113172490447094339)

cross-posted from: https://lemmy.ml/post/20513234

www.cnbc.com

- Jim Kavanaugh, CEO of World Wide Technology, told CNBC that people are “too smart” to accept artificial intelligence won’t alter their work environment.
- Business leaders shouldn’t “BS” employees about the impact of AI on jobs, Kavanaugh said, adding that they should be as transparent and honest as possible.
- Kavanaugh, who has a net worth of $7 billion, stressed that overall he’s an optimist when it comes to AI and its ability to improve productivity.

cross-posted from: https://lemmy.world/post/19885024

> Media and search engines nowadays need a flag system and a filter for AI junk results